CAIDAS Member Damien Garreau Receives Best Paper Award at ECML PKDD 2024

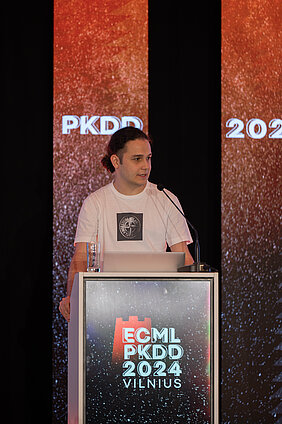

10.09.2024

CAIDAS members Damien Garreau and his research assistant Magamed Taimeskhanov, together with Ronan Sicre, have been awarded a Best Paper Award at the ECML PKDD 2024 conference for their research on flaws in AI interpretability methods.

CAIDAS member Damien Garreau and his research assistant Magamed Taimeskhanov, along with Ronan Sicre from Ecole Centrale Marseille, were honored with a Best Paper Award at the ECML PKDD 2024 conference. They were recognized for their publication "CAM-based methods can see through walls", in which they address the limitations of CAM-based methods in AI interpretability.

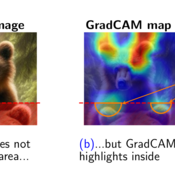

Their paper highlights an important issue with commonly used class activation map (CAM) techniques: these methods can attribute importance to areas of an image that the model does not actually use in its decision-making. The research combines theoretical and experimental insights and calls for a more careful evaluation of interpretability tools in machine learning.

Damien Garreau has been a member of CAIDAS since April 2024, heading the group for Theory of Machine Learning. Magamed Taimeskhanov, who joined Damien's group in September 2024, has also been active in the field of explainable AI. Their collaboration with Ronan Sicre led to this notable achievement, showcasing the growing contributions of CAIDAS researchers to the AI community.

The Best Paper Award reflects the relevance and quality of their work in addressing critical questions about AI interpretability. CAIDAS congratulates the authors on this achievement and looks forward to their continued contributions to the field.

Summary of the Publication:

Deep learning algorithms are pervasive in virtually all applications, but their behavior can be hard to understand. This is where Explainable AI comes into play, providing key insights into how a specific decision is made. In the context of image classification, a common way to achieve this is to provide the user with a saliency map (a heatmap highlighting the parts of the image the model relied on most). When the underlying model is a convolutional neural network (CNN), variants of CAM (class activation maps [1]) such as Grad-CAM [2] are typically used to produce these saliency maps. In this work, we outline a problematic behavior common to nearly all of these methods: they can highlight provably unused parts of the image. This is an issue, since end-users can be misled into thinking that parts of the image are important for the prediction when they are not.
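To make the terminology concrete, here is a minimal Python sketch of CAM and Grad-CAM for the one architecture where both are easy to write by hand: a last convolutional layer with feature maps A^k, followed by global average pooling and a linear layer with class weights w_k. Under that assumption the gradient of the class score with respect to every activation A^k_ij is w_k / (H·W), so Grad-CAM reduces to CAM up to a positive factor. The function names and the toy feature maps are hypothetical; real Grad-CAM implementations obtain the gradients by backpropagation through an arbitrary CNN.

```python
from typing import List

Map = List[List[float]]  # one H x W feature map


def relu(v: float) -> float:
    return max(v, 0.0)


def cam(features: List[Map], weights: List[float]) -> Map:
    """CAM: ReLU of the class-weighted sum of the last conv feature maps.

    Assumes (hypothetically) a global-average-pooling + linear head, so
    weights[k] is the linear weight of channel k for the target class.
    """
    h, w = len(features[0]), len(features[0][0])
    return [
        [relu(sum(wk * fk[i][j] for wk, fk in zip(weights, features))) for j in range(w)]
        for i in range(h)
    ]


def grad_cam(features: List[Map], weights: List[float]) -> Map:
    """Grad-CAM for the same assumed head, with hand-derived gradients.

    The class score is y = sum_k w_k * mean(A^k), so dy/dA^k_ij = w_k / (H*W)
    at every position; alpha_k is the spatial mean of that gradient.
    """
    h, w = len(features[0]), len(features[0][0])
    grads = [[[wk / (h * w)] * w for _ in range(h)] for wk in weights]
    alphas = [sum(map(sum, g)) / (h * w) for g in grads]
    return [
        [relu(sum(a * fk[i][j] for a, fk in zip(alphas, features))) for j in range(w)]
        for i in range(h)
    ]
```

With `features = [[[1.0, 2.0], [0.0, 3.0]], [[0.5, 0.0], [1.0, 1.0]]]` and `weights = [1.0, -2.0]`, `cam` gives `[[0.0, 2.0], [0.0, 1.0]]` and `grad_cam` gives the same map divided by H·W = 4.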

To explain this phenomenon, we take two angles of attack. First, we conduct a theoretical analysis of a simple CNN at initialization, proving a lower bound on the saliency map. Second, we create two new datasets composed of images containing two animals, one on top of the other. We then train a partially blind VGG16 on ImageNet: more precisely, this network cannot see the lower 25% of the image. On the two aforementioned datasets, CAM-based methods systematically highlight the lower part of the image, even though the partially blind network is insensitive to it.
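The partially blind setup can be mimicked in miniature. The Python sketch below is not the authors' VGG16 experiment: it assumes a hypothetical 4×4 input, a single 1×1 convolution whose input is masked on the bottom row (25% of the image), and a global-average-pooling plus linear head. Because masked pixels are zeroed before the convolution, activations there reduce to ReLU(bias), so the class score provably cannot depend on those pixels, yet CAM still assigns them positive saliency whenever biases and head weights combine positively. This bias-driven mechanism is an assumption chosen for illustration, not a restatement of the paper's proof.

```python
from typing import List

Grid = List[List[float]]


def relu(v: float) -> float:
    return max(v, 0.0)


def blind_features(x: Grid, conv_w: List[float], conv_b: List[float],
                   blind_rows: int) -> List[Grid]:
    """Hypothetical 1x1-conv layer that is blind to the bottom rows.

    Pixels in the last `blind_rows` rows are masked to 0 before the
    convolution, so the activation there is ReLU(bias) for every input.
    """
    h, w = len(x), len(x[0])
    visible = h - blind_rows
    return [
        [[relu(a * (x[i][j] if i < visible else 0.0) + b) for j in range(w)]
         for i in range(h)]
        for a, b in zip(conv_w, conv_b)
    ]


def class_score(features: List[Grid], head_w: List[float]) -> float:
    """Global average pooling followed by a linear layer (one class)."""
    return sum(wk * sum(map(sum, fk)) / (len(fk) * len(fk[0]))
               for wk, fk in zip(head_w, features))


def cam(features: List[Grid], head_w: List[float]) -> Grid:
    """CAM: ReLU of the head-weighted sum of the feature maps."""
    h, w = len(features[0]), len(features[0][0])
    return [[relu(sum(wk * fk[i][j] for wk, fk in zip(head_w, features)))
             for j in range(w)] for i in range(h)]


# Hypothetical parameters: 4x4 image whose bottom row (25%) is invisible.
CONV_W, CONV_B, HEAD_W, BLIND = [1.0, -1.0], [0.5, 0.2], [1.0, 0.5], 1

x = [[0.9, 0.1, 0.4, 0.7],
     [0.2, 0.8, 0.3, 0.5],
     [0.6, 0.0, 0.9, 0.1],
     [0.3, 0.3, 0.3, 0.3]]
x_edit = [row[:] for row in x]
x_edit[3] = [1.0, 0.0, 1.0, 0.0]  # change only the invisible bottom row

f = blind_features(x, CONV_W, CONV_B, BLIND)
f_edit = blind_features(x_edit, CONV_W, CONV_B, BLIND)
print(class_score(f, HEAD_W) == class_score(f_edit, HEAD_W))  # True: bottom row unused
print(cam(f, HEAD_W)[3])  # yet the bottom row still receives positive saliency
```

Swapping the bias values for zeros makes the bottom-row saliency vanish in this toy, which is one way to see why the check has to be run on the trained model rather than assumed.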

To summarize, one should not take saliency maps at face value. In the future, we hope that the experimental framework we propose becomes a standard sanity check for saliency maps.

[1] Zhou et al., Learning Deep Features for Discriminative Localization, CVPR, 2016

[2] Selvaraju et al., Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization, International Journal of Computer Vision, 2019

![Magamed receiving the Best Paper Award at ECML PKDD 2024 in Vilnius, Lithuania.](/fileadmin/_processed_/0/3/csm_magamed_2_14x6_8acc2cf80e.jpg)